05 Nov

Introduction:

AWS Auto Scaling is one of the most powerful features of the cloud and a strong reason to move your applications there. It helps you build a resilient architecture, and it reduces cost because you no longer have to keep infrastructure sized for peak capacity running all the time.

However, there are a few items to consider when building an auto-scaling architecture to make sure you get the full benefit of it.

When to Scale?

The most common way to scale is on CPU usage: you define thresholds that specify when to add a new instance and when to terminate one. You can also scale on other criteria, for example memory usage, disk I/O, or a custom metric such as the number of web server requests. Note that EC2 does not report memory usage by default, so it has to be published as a custom metric, for example with the CloudWatch agent. Which metric you choose depends on where the bottleneck appears in your environment under high load.

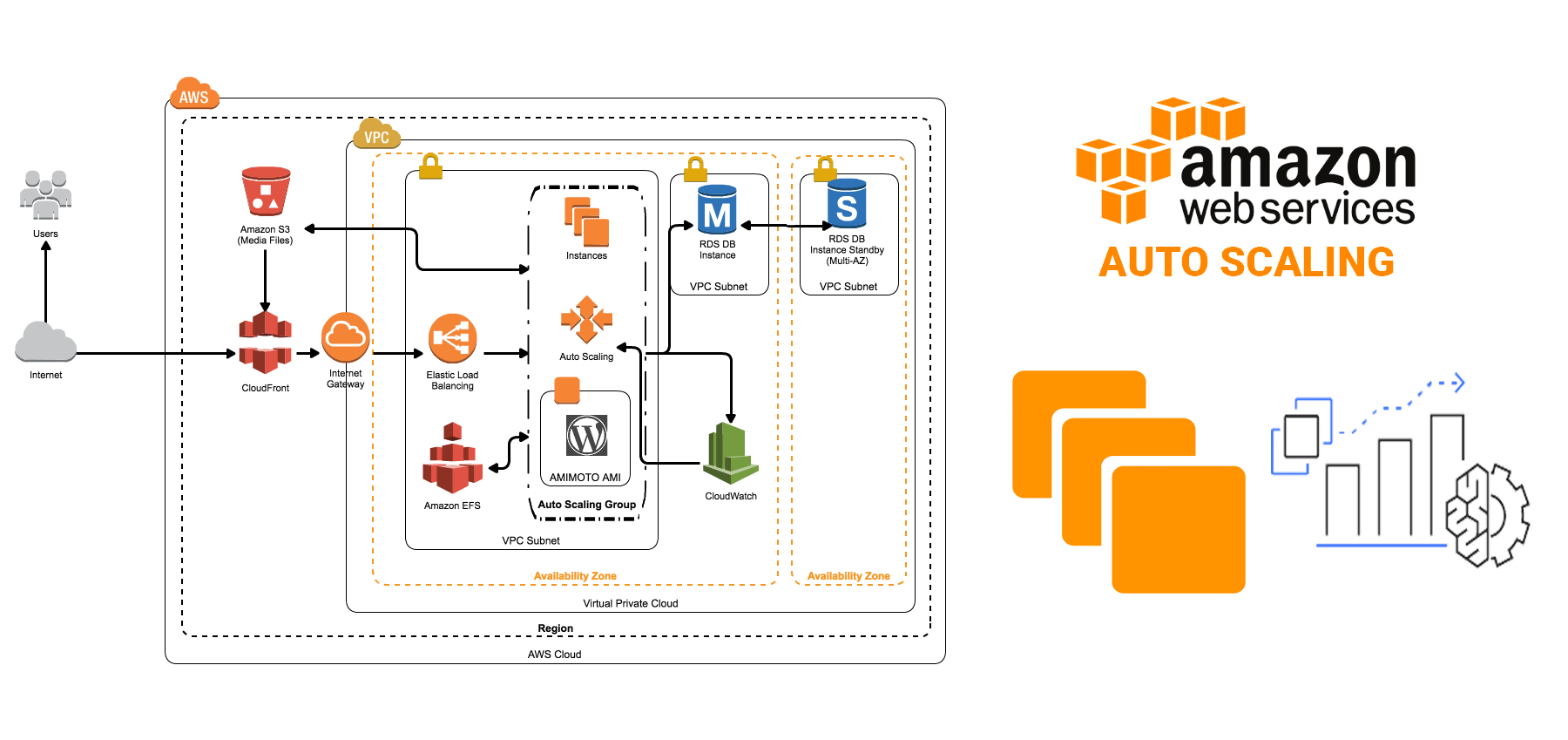
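The threshold idea described above can be sketched in a few lines of Python. This is only an illustration of the decision logic, not how AWS implements it: the `CpuThresholds` class, the `desired_capacity` function, and the 70% / 30% values are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuThresholds:
    """Hypothetical thresholds; tune them to your own workload."""
    scale_out_above: float = 70.0  # add an instance above this average CPU %
    scale_in_below: float = 30.0   # remove an instance below this average CPU %


def desired_capacity(current: int, avg_cpu: float, thresholds: CpuThresholds,
                     min_size: int = 1, max_size: int = 10) -> int:
    """Return the instance count to aim for after one evaluation period."""
    if avg_cpu > thresholds.scale_out_above:
        target = current + 1
    elif avg_cpu < thresholds.scale_in_below:
        target = current - 1
    else:
        target = current  # inside the band: keep the current size
    # Never leave the group's configured bounds.
    return max(min_size, min(max_size, target))


if __name__ == "__main__":
    limits = CpuThresholds()
    print(desired_capacity(2, 85.0, limits))  # high load: scale out
    print(desired_capacity(2, 50.0, limits))  # normal load: no change
    print(desired_capacity(2, 10.0, limits))  # low load: scale in
```

Keeping a gap between the scale-out and scale-in thresholds is a deliberate choice here: it stops the group from adding and removing instances back and forth when the load hovers around a single value.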

Which Load Balancer to Choose?

AWS Auto Scaling works with the three types of load balancer offered by Elastic Load Balancing: Classic, Application, and Network. The main difference is the layer they operate on. The Classic Load Balancer is the basic option: it distributes traffic across instances but cannot route requests based on their content. The Application Load Balancer works at layer 7 (HTTP/HTTPS) and lets you configure routing rules for your requests, for example by host name or path.

The Network Load Balancer works at layer 4 and suits high-performance applications, and you can assign it a static IP address. Keep in mind that its session stickiness is limited to source-IP affinity; cookie-based stickiness is not available.

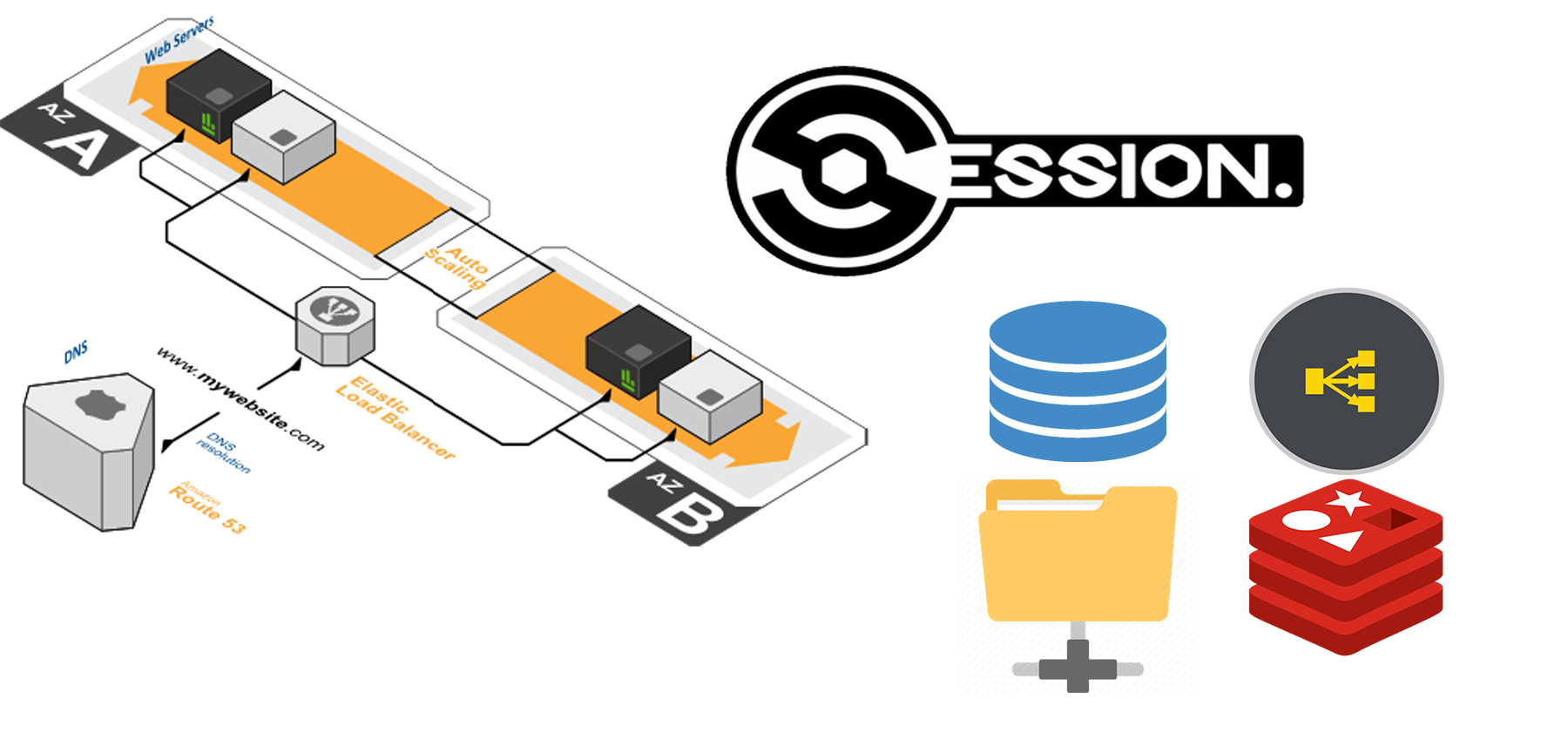

How to Centralize Shared Storage?

This is where you place dynamic content such as media, attachments, and even config files, so that any of the instances can read or modify it. The most common way to implement it is Amazon Elastic File System (EFS), a network file system that can be mounted on all auto-scaled instances at the same time.

You can also synchronize files between instances with tools such as rsync, Unison, or osync, but we don’t recommend this for environments with more than 5 instances.

How to Manage Sessions?

We discussed how to manage sessions under AWS Auto Scaling, or any multi-server environment, in a previous article. We highly recommend reading it to pick the approach that fits your application.

IP Address Forwarding?

Since requests are received by the load balancer, they reach the EC2 instance with the load balancer's IP as the source address. The visitor's IP is still carried, but in a separate header (X-Forwarded-For on HTTP and HTTPS listeners). To find the real visitor IP, please check this article.
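As a rough illustration, the visitor IP can be recovered from the `X-Forwarded-For` header that HTTP and HTTPS load balancers add. The sketch below assumes a single load balancer in front of the instance; the `visitor_ip` function and its arguments are hypothetical names, not part of any AWS SDK.

```python
from ipaddress import ip_address
from typing import Mapping


def visitor_ip(headers: Mapping[str, str], peer_ip: str) -> str:
    """Best-effort visitor IP for a request that came through one load balancer."""
    # Header names are case-insensitive, so normalize them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    forwarded = lowered.get("x-forwarded-for", "")

    # The header is a comma-separated list. Each proxy appends the address it
    # received the request from, so the last entry is the one our load
    # balancer added; earlier entries may have been supplied by the client.
    entries = [part.strip() for part in forwarded.split(",") if part.strip()]
    if entries:
        try:
            return str(ip_address(entries[-1]))
        except ValueError:
            pass  # malformed entry: fall back to the connection's peer address
    return peer_ip


if __name__ == "__main__":
    request_headers = {"X-Forwarded-For": "198.51.100.4, 203.0.113.7"}
    print(visitor_ip(request_headers, "10.0.0.5"))
```

Taking the last entry rather than the first is a conservative choice: anything to the left of it can be forged by the client. If you have more proxies in the chain (a CDN, for example), the position to trust changes accordingly.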

Where to Locate My Database?

AWS offers many database options for your application: Amazon RDS for relational databases such as MySQL, Aurora, PostgreSQL, SQL Server, and Oracle, or non-relational databases such as Amazon DynamoDB. Either way, you need to move your database off the application server to a centralized location, so that every instance works with the same data.

Concerned About Cost?

You can significantly reduce the cost of auto-scaled instances by combining Spot Instances with On-Demand Instances. This option is available in the Auto Scaling group definition. It is also highly recommended to set billing alarms so that unexpected costs, for example from a misconfiguration or a DDoS attack, are caught early.
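To make the Spot and On-Demand mix more concrete, here is a minimal sketch that builds the `MixedInstancesPolicy` structure accepted by the Auto Scaling API (for example, as an argument to boto3's `create_auto_scaling_group`). The helper name, the launch template name, and the instance types are placeholders chosen for this example; the code only builds the dictionary and does not call AWS.

```python
from typing import Any, Dict, List


def mixed_instances_policy(launch_template_name: str,
                           instance_types: List[str],
                           on_demand_base: int,
                           on_demand_percent_above_base: int) -> Dict[str, Any]:
    """Build a MixedInstancesPolicy dict combining On-Demand and Spot capacity."""
    if not 0 <= on_demand_percent_above_base <= 100:
        raise ValueError("on_demand_percent_above_base must be between 0 and 100")
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,
                "Version": "$Latest",
            },
            # Several instance types give Spot more pools to draw from.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            # Always keep this many On-Demand instances as a stable base.
            "OnDemandBaseCapacity": on_demand_base,
            # Above the base, this percentage is On-Demand; the rest is Spot.
            "OnDemandPercentageAboveBaseCapacity": on_demand_percent_above_base,
        },
    }


if __name__ == "__main__":
    policy = mixed_instances_policy("web-template", ["t3.medium", "t3a.medium"], 2, 25)
    print(policy["InstancesDistribution"])
```

With the placeholder values above, the group would keep 2 On-Demand instances at all times and fill roughly a quarter of any additional capacity with On-Demand, leaving the rest to Spot.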

How to Set Proper Access Rules?

You want to make sure your application servers are reachable only through the load balancer. How? First, you can place the application servers in a private subnet. Another good option is to configure the application servers' security group to allow HTTP and HTTPS traffic from the load balancer's security group only.
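The security group rule described above can be expressed as the `IpPermissions` list that the EC2 API expects (for example, in boto3's `authorize_security_group_ingress`). The following is a sketch under assumptions: `lb_only_ingress` is a hypothetical helper, and the security group ID is a placeholder. It only builds the data structure and makes no AWS call.

```python
from typing import Any, Dict, Iterable, List


def lb_only_ingress(lb_security_group_id: str,
                    ports: Iterable[int] = (80, 443)) -> List[Dict[str, Any]]:
    """Ingress rules that admit TCP traffic only from the load balancer's group."""
    return [
        {
            "IpProtocol": "tcp",
            "FromPort": port,
            "ToPort": port,
            # Referencing a security group instead of a CIDR range means only
            # resources in that group (the load balancer) may connect.
            "UserIdGroupPairs": [{"GroupId": lb_security_group_id}],
        }
        for port in ports
    ]


if __name__ == "__main__":
    for rule in lb_only_ingress("sg-0123456789abcdef0"):  # placeholder ID
        print(rule)
```

Because the rules reference the load balancer's security group rather than IP ranges, they keep working when the load balancer's own addresses change.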

Need a Centralized Logs Storage?

It is not easy to track logs in an auto-scaled, load-balanced environment, because you need to check the logs on each server to see whether a failed request hit it or not. You may also lose the logs of instances that were terminated by scale-in actions. The best way is to implement centralized log storage such as Amazon CloudWatch Logs, where you can see all logs in one location, define alarms, and run powerful queries. For more information about this topic, check the CloudWatch Logs agent and how to configure it.
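One thing that helps once logs from many servers land in one place is knowing which instance wrote each line. The sketch below is an assumption on our side, not something the CloudWatch agent requires: it uses Python's standard `logging` module to prefix every record with an instance name. The names `InstanceTag`, `build_logger`, and the logger name `app` are placeholders.

```python
import io
import logging
import socket
from typing import Optional, TextIO


class InstanceTag(logging.Filter):
    """Attach the instance name to every record passing through the handler."""

    def __init__(self, instance_name: str) -> None:
        super().__init__()
        self.instance_name = instance_name

    def filter(self, record: logging.LogRecord) -> bool:
        record.instance = self.instance_name
        return True  # never drop a record, only annotate it


def build_logger(stream: TextIO, instance_name: Optional[str] = None) -> logging.Logger:
    """Return a logger whose lines start with the instance name."""
    handler = logging.StreamHandler(stream)
    # Fall back to the host name when no instance name is given.
    handler.addFilter(InstanceTag(instance_name or socket.gethostname()))
    handler.setFormatter(logging.Formatter("%(instance)s %(levelname)s %(message)s"))

    logger = logging.getLogger("app")  # "app" is a placeholder logger name
    logger.handlers.clear()            # avoid duplicate handlers on repeated calls
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    buffer = io.StringIO()
    build_logger(buffer, "i-0123example").info("request failed")
    print(buffer.getvalue(), end="")
```

In a real setup the handler would write to a log file that the agent ships to the central store; the in-memory buffer here just keeps the example self-contained.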

AWS Auto Scaling Deployment Management

The AWS CodeDeploy service has solid integration with AWS Auto Scaling environments: it can detect when a new instance has been launched and automatically deploy the latest deployment artifact to it. You can also associate a deployment group with a load balancer, and CodeDeploy will take care of removing the instance from the load balancer to block traffic during the deployment and adding it back when the deployment completes.

Conclusion:

There are quite a few items to consider when building an auto-scaling environment, but they are easy to implement. Thanks to AWS for making our lives easier 🙂

AWS PS has been implementing AWS Auto Scaling architectures for years and has extensive experience in this field. Feel free to contact us for a free quote.